Audio Tracker demo

Audio Record capture demonstration via Android SDK

ACR Audio Tracker demo

(using Alphonso SDK 2.0.46 (third) example of capturing spoken phonetic alphabet)

Introduction

The research published on this page is one part of an ongoing project started in 2015 that initially focussed on near ultra-high frequency (NUHF) audio beacon signals directed at mobile devices for tracking purposes. The project, known as PilferShush, has resulted in an audio counter-surveillance Android app that is free, open source and available to download from either the Google Play or F-Droid stores. A research page hosted on the City Frequencies website is the primary location for information regarding this research while more general research is posted to the @cityfreqs Twitter account.

The question “is my device listening to me?” has been asked many times over the past few years. To help answer it, this page examines a demonstration that uses an Android mobile phone and a game app downloaded for free from Google Play. The demonstration is somewhat technical, but it is presented so that readers can examine both the broader concepts and some of the specifics, which may encourage similar research, either as confirmation or for additional insights.

The following investigation demonstrates that the Alphonso Software Development Kit (SDK) included in the game app records audio using the device microphone and then packages that audio into 64 kB files suitable for uploading to servers. Whether this app and its SDK actually upload these audio files is primarily determined by the location of the device (Alphonso press statements suggest they only enable this in the USA) and a simple boolean switch in an XML file.

One reason Alphonso might upload the audio is to use the processing power of its servers to run proprietary code that creates new fingerprints to add to its database. An illustration of this reasoning can be found in Bloomberg’s recent article on Amazon’s Alexa device, which shows that despite the hyperbolic marketing statements heralding the power of “machine learning” and “A.I.”, there is still a great need for cheap, exploitable human labour to serve as “mechanical Turks”.

Method

After the app was installed, its permissions approved and it was run for the first time, the phonetic alphabet was spoken aloud in the vicinity of the mobile device. Using its default settings, the SDK captured a ten-second portion of this spoken alphabet and split it into three 64 kB files of raw audio data. Importing the three raw audio files into Audacity allowed the audio to be played back intelligibly.

The app, known to contain the Alphonso SDK, has the RECORD_AUDIO permission (as listed on the store page). This SDK is used for Audio Content Recognition (ACR) and cross device tracking (XDT). ACR is a technique that attempts to derive and match unique aspects (fingerprint) of a given audio sample with a database of known fingerprints.

ACR

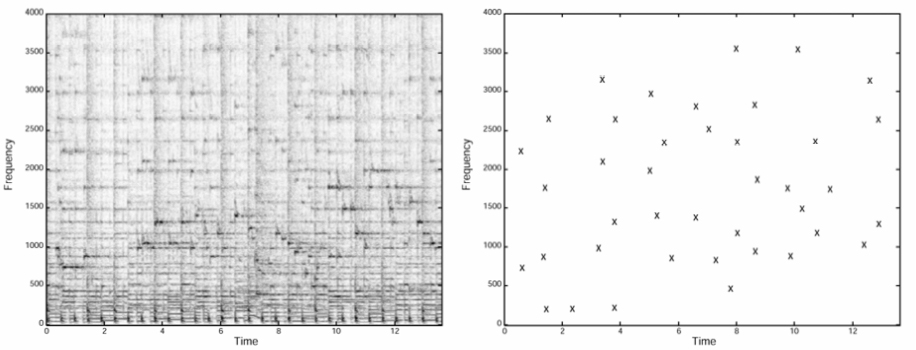

Audio Content Recognition is a system that generates an identifiable and simple “fingerprint” of a complex audio signal. A simplified example is the way the Shazam app listens to a song that is playing and can then identify its title and artist. To do this, the company offering the service creates a database of audio fingerprints generated by processing audio files through a particular algorithm. This algorithm creates a fingerprint based upon key features of the audio as determined by a spectrogram.

The left side of the above image shows the initial spectrogram of the audio, a representation of frequencies over time. The right side shows an example of key features being identified: here, the intensity (amplitude) of particular frequencies, shown by the ‘X’ marks. Taking a section of these amplitudes and representing them mathematically as a relatively small number provides the fingerprint, which can then be stored in a database or easily transmitted over the internet.
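The step from ‘X’ marks to “a relatively small number” can be illustrated with the landmark-pairing approach publicly described for Shazam. This is a minimal, hypothetical sketch (LandmarkHasher, Peak and fanOut are our names) that assumes peaks have already been picked from the spectrogram. It is not Alphonso’s fingerprinting, which runs in the native libacr.so and was not examined here.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical Shazam-style "landmark" hashing: pairs of spectrogram peaks are
// packed into single ints. Illustrative only; NOT Alphonso's native algorithm.
final class LandmarkHasher {

    /** A spectrogram peak (one of the 'X' marks): time frame index and frequency bin index. */
    static final class Peak {
        final int frame;
        final int bin;
        Peak(int frame, int bin) { this.frame = frame; this.bin = bin; }
    }

    /**
     * Pairs each anchor peak with up to fanOut later peaks and packs
     * (anchor bin, target bin, frame delta) into one int, 10 bits each.
     * Peaks must be sorted by frame. Returns {hash, anchorFrame} pairs.
     */
    static List<int[]> hashes(List<Peak> peaks, int fanOut) {
        List<int[]> out = new ArrayList<>();
        for (int i = 0; i < peaks.size(); i++) {
            Peak anchor = peaks.get(i);
            for (int j = i + 1; j < peaks.size() && j <= i + fanOut; j++) {
                Peak target = peaks.get(j);
                int dt = target.frame - anchor.frame;
                if (dt <= 0 || dt > 1023) continue; // the delta must fit in 10 bits
                int hash = ((anchor.bin & 0x3FF) << 20)
                         | ((target.bin & 0x3FF) << 10)
                         | dt;
                out.add(new int[] {hash, anchor.frame});
            }
        }
        return out;
    }
}
```

Each int is far smaller than the audio it summarises, and keeping the anchor frame alongside it is what later allows matches to be checked for consistent timing.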

Using a similar methodology to the one described above, several ACR companies have created SDKs that are embedded within smart phone apps for the purpose of tracking which television shows and adverts are watched. The recordings usually last a few seconds (5 to 15 seconds) and are processed on the device to generate a “fingerprint” of the audio. This fingerprint is then sent via a network connection to servers for querying. Most of these companies provide analytics to their clients so that they can assess which adverts are being seen and by whom.
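The “querying” step can be sketched as an index lookup with offset voting, the usual companion to landmark hashing. This is a hypothetical illustration (FingerprintIndex, add, match and minVotes are our names), not any vendor’s server code: each query hash votes for a (content, time offset) pair, and a genuine match produces many hashes that agree on the same offset.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical reference index: hash -> list of {contentId, frame} occurrences.
final class FingerprintIndex {
    private final Map<Integer, List<int[]>> index = new HashMap<>();

    /** Registers one hash from a known piece of content (e.g. an advert). */
    void add(int hash, int contentId, int frame) {
        index.computeIfAbsent(hash, k -> new ArrayList<>()).add(new int[] {contentId, frame});
    }

    /**
     * Query entries are {hash, frame}. Returns the content id whose hashes agree on
     * a single time offset most often, or -1 if fewer than minVotes agree.
     */
    int match(List<int[]> query, int minVotes) {
        Map<Long, Integer> votes = new HashMap<>(); // key packs (contentId, offset)
        int bestId = -1;
        int bestCount = 0;
        for (int[] q : query) {
            for (int[] ref : index.getOrDefault(q[0], List.of())) {
                int offset = ref[1] - q[1];
                long key = ((long) ref[0] << 32) | (offset & 0xFFFFFFFFL);
                int count = votes.merge(key, 1, Integer::sum);
                if (count > bestCount) {
                    bestCount = count;
                    bestId = ref[0];
                }
            }
        }
        return bestCount >= minVotes ? bestId : -1;
    }
}
```

Voting on the offset rather than on raw hash hits is what makes the approach robust to a recording that starts partway through an advert.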

Expanding on this initial purpose, several companies are also using this ACR technique to record and determine what other sounds are present, especially in the background. One example is the La Liga Soccer app that is triggered by GPS location and records audio to determine whether the device is in the vicinity of an unauthorised broadcast of a soccer match - Engadget La Liga app article.

Another future trend can be found in a Facebook patent application that seeks to record and fingerprint background, ambient sounds to provide a context for devices and their usage - Fastcompany Facebook ambient audio patent article. This article also discusses other uses of this technique by companies such as Alphonso and Cambridge Analytica.

XDT

Cross device tracking is primarily used by advertisers and marketers to measure where their advertising spend goes and how effective it is. The intention is to prove that, for example, a company’s expensive US NFL Super Bowl commercial was seen by n viewers and that a subset of them then went on to view the company’s website and (ideally) purchase something. Another part of this concept is to promote what is referred to as nudging, which suggests that a given marketing campaign can “direct” the viewer in a particular direction. Useful reading on this concept can be found in Online Manipulation: Hidden Influences in a Digital World.

Wikipedia has a simple definition quoted below:

> Cross device tracking is a technique in which technology companies and advertisers deploy trackers, often in the form of unique identifiers, cookies, or even ultrasonic signals, to generate a profile of users across multiple devices, not simply one. For example, one such form of this tracking uses audio beacons, or inaudible sounds, emitted by one device and recognized through the microphone of the other device.

APP INSTALL

In this particular investigation the Android game app is downloaded to a specific Android device as well as onto a computer via Raccoon to enable static analysis using jadx-gui.

Link to Google Play store game app: Victoria Aztec Hidden Object

Google Play app store listing includes an Alphonso SDK integration statement:

> This app is integrated with Alphonso software. Subject to your permission, the Alphonso software receives short duration audio samples from the microphone on your device. The audio samples never leave your device, but are irreversibly encoded (hashed) into digital "fingerprints." The fingerprints are compared off-device to commercial content (e.g., TV, OTT programming, ads music etc.). If a match is found, then appropriate recommendation for content or ads may be delivered to your mobile device. The Alphonso software only matches against known audio content and does not recognize or understand human conversations or other sounds.

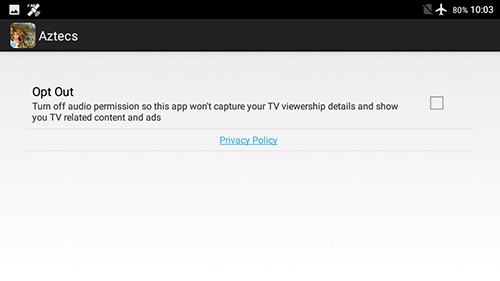

Running the app allows access to this “Settings” page:

The settings page also provides a link to the privacy policy of the game developer: Molu Apps Privacy Policy.

Permissions requested by the app at install are shown to the user, along with a brief justification:

"This app uses audio to detect TV ads and content and shows appropriate mobile ads"

"android.permission.RECORD_AUDIO"

"android.permission.ACCESS_COARSE_LOCATION"

APK Analysis

A page describing how an Android app can be analysed is found on the Exodus Privacy website.

Knowing that this app will use the record audio function at some point unrelated to gameplay, the next step is to determine when that might occur. Recording audio on an Android phone does not consume much battery power, CPU time or storage, provided only a few files are kept and constantly overwritten with fresh recordings. However, recording useful audio on an Android device is problematic, especially when the intent is to process that audio to determine its characteristics. In this case, the processing (ACR) tries to identify features of the recorded audio. These features are the types of sounds found in adverts and TV programmes, which can be assumed to consist of music, speech, sound effects or even abstract sounds.

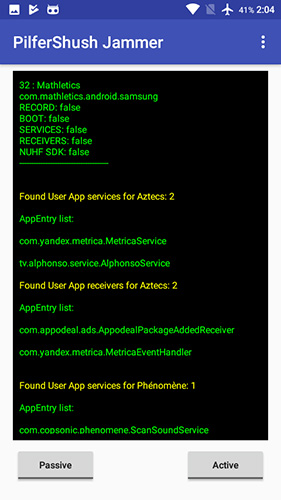

One of the first ways to reduce the number of potentially useless recordings is to restrict the times at which the SDK records audio. As this is a cross device tracking SDK that triggers adverts, one of the first checks is whether the device is actively being used. To help examine this, the PilferShush Jammer app, which contains a background services scanner, is used to list any services a particular app has that can run in the background even when the parent app is not running. The service in this case is called tv.alphonso.service.AlphonsoService:

The AlphonsoService sets various parameters including “deviceId”, “androidId”, “adId”, “uuId” and a “PrimeTimeArray”.

```java
public void initializePrimeTimeArray() {
    this.mPrimeTimeArray = new PrimeTime[5];
    this.mPrimeTimeArray[0] = new PrimeTime();
    this.mPrimeTimeArray[1] = new PrimeTime();
    this.mPrimeTimeArray[2] = new PrimeTime();
    this.mPrimeTimeArray[3] = new PrimeTime();
    this.mPrimeTimeArray[4] = new PrimeTime();
    this.mPrimeTimeArray[0].asFSMBeginEvent = 47;
    this.mPrimeTimeArray[0].asFSMEndEvent = 48;
    this.mPrimeTimeArray[1].asFSMBeginEvent = 49;
    this.mPrimeTimeArray[1].asFSMEndEvent = 50;
    this.mPrimeTimeArray[2].asFSMBeginEvent = 51;
    this.mPrimeTimeArray[2].asFSMEndEvent = 52;
    this.mPrimeTimeArray[3].asFSMBeginEvent = 53;
    this.mPrimeTimeArray[3].asFSMEndEvent = 54;
    this.mPrimeTimeArray[4].asFSMBeginEvent = 55;
    this.mPrimeTimeArray[4].asFSMEndEvent = 56;
}
```

The elements of mPrimeTimeArray are instances of tv.alphonso.service.PrimeTime, which holds the settings that determine when to begin and end any audio captures.

```java
public PrimeTime() {
    this.begin = "";
    this.end = "";
    this.captureCount = -1;
    this.captureScenarioSleepInterval = -1;
    this.captureScenarioSleepIntervalMax = -1;
    this.captureScenarioSleepIntervalLivetv = -1;
    this.captureScenarioSleepIntervalInhibiterIncrement = -1.0d;
}
```

It also calls tv.alphonso.service.LocationService which periodically reports the device location to a server.

```java
public void sendLocationUpdate(Location location) {
    Bundle params = new Bundle();
    params.putParcelable("location", location);
    if (this.mAlphonsoClient != null) {
        Message msg = this.mAlphonsoClient.mHandler.obtainMessage();
        msg.what = 3;
        msg.setData(params);
        if (debug) {
            Log.d(TAG, "Sending Location Update to AlphonsoClient.");
        }
        this.mAlphonsoClient.mHandler.sendMessage(msg);
    }
    if (this.mProvClient != null) {
        this.mProvClient.processLocationUpdate();
    }
}
```

Location services are also used to determine whether the device is stationary, which can be a good indication that the user is sitting down and looking at the screen. The file tv.alphonso.utils.Utils has some relevant code:

```java
locBundle.put("latitude", Double.valueOf(loc.getLatitude()));
locBundle.put("longitude", Double.valueOf(loc.getLongitude()));
locBundle.put("altitude", Double.valueOf(loc.getAltitude()));
locBundle.put("speed", Float.valueOf(loc.getSpeed()));
locBundle.put("bearing", Float.valueOf(loc.getBearing()));
locBundle.put("accuracy", Float.valueOf(loc.getAccuracy()));
tm.getNetworkCountryIso();
```

The primetime settings have defaults that are set in tv.alphonso.utils.PreferencesManager:

```java
public static final String ACS_EVENING_PRIME_TIME_BEGIN_DEFAULT = "19:00";
public static final String ACS_EVENING_PRIME_TIME_END_DEFAULT = "22:00";
public static final String ACS_MORNING_PRIME_TIME_BEGIN_DEFAULT = "06:00";
public static final String ACS_MORNING_PRIME_TIME_END_DEFAULT = "09:00";
```

From this we can assume that the app has specific times and device states (such as being stationary) under which it will begin the audio capture process.
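The two default windows and the stationary check described above can be combined into a single gate. The minimal sketch below uses java.time; the class name CaptureGate and the 0.5 m/s threshold are assumptions chosen for illustration (Location.getSpeed() reports metres per second), and the SDK’s actual decision logic, including how the PrimeTime FSM events are used, was not recovered.

```java
import java.time.LocalTime;

// Hypothetical capture gate built from the PreferencesManager defaults.
// The speed threshold is an illustrative assumption, not a value from the SDK.
final class CaptureGate {
    static final LocalTime MORNING_BEGIN = LocalTime.parse("06:00");
    static final LocalTime MORNING_END = LocalTime.parse("09:00");
    static final LocalTime EVENING_BEGIN = LocalTime.parse("19:00");
    static final LocalTime EVENING_END = LocalTime.parse("22:00");
    static final float STATIONARY_SPEED_MPS = 0.5f; // hypothetical threshold

    /** Begin is inclusive, end is exclusive. */
    static boolean inWindow(LocalTime t, LocalTime begin, LocalTime end) {
        return !t.isBefore(begin) && t.isBefore(end);
    }

    static boolean isPrimeTime(LocalTime t) {
        return inWindow(t, MORNING_BEGIN, MORNING_END) || inWindow(t, EVENING_BEGIN, EVENING_END);
    }

    /** Capture only during prime time and when the reported speed suggests the device is still. */
    static boolean shouldCapture(LocalTime now, float speedMetresPerSecond) {
        return isPrimeTime(now) && speedMetresPerSecond < STATIONARY_SPEED_MPS;
    }
}
```

For example, `CaptureGate.shouldCapture(LocalTime.of(20, 15), 0.09888778f)` (the location_speed recorded in alphonso.xml during this test) returns true, while the same speed at noon returns false.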

When the game app is first started, the SDK embedded inside gets an updated and obfuscated database file called acr.a.2.1.4.db.zero.mp3 (note the mp3 file extension). The assumption here is that this file contains a list of fingerprints that can be compared against any new ones captured by the SDK with the intent of finding a match.

The head of the file looks like this:

```
00000000  41 6c 70 68 6f 6e 73 6f  41 43 52 20 20 20 20 20  |AlphonsoACR     |
00000010  00 00 00 00 06 31 2e 34  2e 30 00 55 01 00 00 1a  |.....1.4.0.U....|
00000020  32 30 31 33 2d 31 30 2d  31 31 20 31 33 3a 33 31  |2013-10-11 13:31|
00000030  3a 31 37 20 2d 30 34 30  30 00 29 35 62 34 66 30  |:17 -0400.)5b4f0|
00000040  31 33 33 31 33 64 32 66  32 64 31 33 30 65 36 39  |13313d2f2d130e69|
00000050  64 63 35 61 30 64 32 65  63 38 61 64 62 31 32 35  |dc5a0d2ec8adb125|
00000060  63 35 37 00 68 00 00 00  ba 00 00 00 00 04 00 00  |c57.h...........|
```
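The header bytes appear to follow a simple pattern: a 16-byte magic string padded with spaces, followed by strings that are each preceded by a one-byte length that counts a trailing NUL (0x06 before “1.4.0”, 0x1a before the 2013 timestamp, and 0x29 before a 40-character hex string that resembles a SHA-1 digest). The sketch below reads the header under that assumption. It is inferred from this dump alone, AcrHeader and its methods are hypothetical, and the four-byte values between fields (for example 55 01 00 00) are left uninterpreted.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical reader for the visible header of acr.a.2.1.4.db.zero.mp3.
// The layout is an inference from the hexdump, not documented by Alphonso.
final class AcrHeader {

    /** Decodes a hex string such as "41 6c 70", ignoring whitespace. */
    static byte[] fromHex(String hex) {
        String clean = hex.replaceAll("\\s+", "");
        byte[] out = new byte[clean.length() / 2];
        for (int i = 0; i < out.length; i++) {
            out[i] = (byte) Integer.parseInt(clean.substring(2 * i, 2 * i + 2), 16);
        }
        return out;
    }

    /** The first 16 bytes: "AlphonsoACR" padded with spaces. */
    static String magic(byte[] data) {
        return new String(data, 0, 16, StandardCharsets.US_ASCII).trim();
    }

    /** Reads a string whose one-byte length (including the trailing NUL) sits at offset. */
    static String prefixedString(byte[] data, int offset) {
        int len = data[offset] & 0xFF;
        return new String(data, offset + 1, len - 1, StandardCharsets.US_ASCII);
    }
}
```

Applied to the bytes above, the length bytes at offsets 0x14, 0x1f and 0x3a yield “1.4.0”, “2013-10-11 13:31:17 -0400” and the 40-character hex string respectively.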

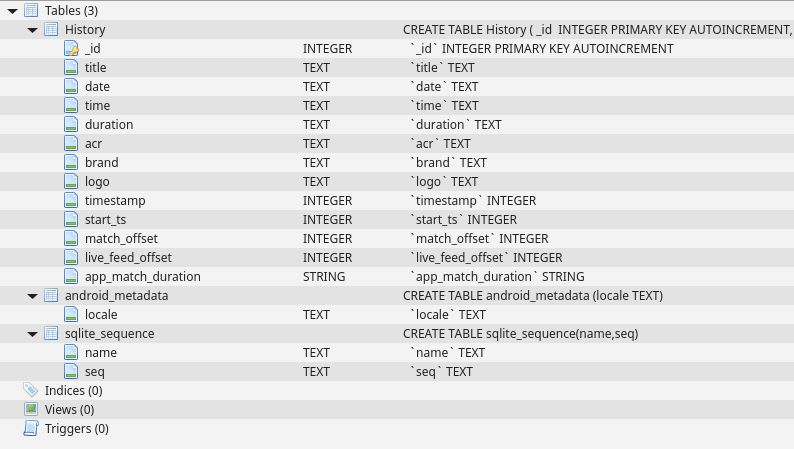

The encrypted database file probably has a schema that conforms to this, although it may simply be a list of time-specific fingerprints:

Some key parameters were also set when the app was first run, this time in the following file found in the app storage directory: alphonso.xml (redacted; n.b.: dev_id and advertising_id have the same values):

```xml
<map>
    <int name="ad_id_poll_duration" value="1800" />
    <float name="location_accuracy" value="5.3096943" />
    <string name="uuid">XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX</string>
    <int name="clock_sync_saved_iterations" value="5" />
    <string name="clock_skew_server_name">clockskew.alphonso.tv</string>
    <float name="location_latitude" value="37.23414" />
    <long name="location_time" value="1541553213743" />
    <int name="acr_mode" value="2" />
    <string name="server_domain">http://tkacr258.alphonso.tv</string>
    <boolean name="audio_file_upload_timedout_flag" value="false" />
    <string name="location_provider">network</string>
    <float name="location_longitude" value="-120.46891" />
    <boolean name="history_flag" value="true" />
    <long name="capture_sleep_time" value="1" />
    <boolean name="audio_file_upload_flag" value="true" />
    <int name="capture_scenario_count" value="0" />
    <string name="dev_id">XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX</string>
    <int name="capture_count" value="3" />
    <int name="clock_sync_poll_interval" value="600" />
    <float name="capture_scenario_sleep_inhibiter_increment" value="2.0" />
    <string name="acr_db_filename">acr.a.2.1.4.db.zero.mp3</string>
    <string name="android_id">XXXXXXXXXXXXXXXX</string>
    <string name="advertising_id">XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX</string>
    <string name="server_port_ssl"></string>
    <boolean name="capture_power_optimization_mode" value="true" />
    <long name="capture_scenario_sleep_interval" value="8" />
    <long name="capture_duration_ms" value="4000" />
    <int name="acr_shift" value="0" />
    <float name="location_altitude" value="44.54821" />
    <long name="capture_scenario_sleep_interval_livetv_match" value="60" />
    <string name="acr_db_file_dir">/data/user/0/com.fgl.adrianmarik.victoriaaztecsfree/files</string>
    <int name="capture_prebuffer_size" value="0" />
    <int name="db_max_records" value="1000" />
    <string name="acr_db_file_abs_path">/data/user/0/com.fgl.adrianmarik.victoriaaztecsfree/files/acr.a.2.1.4.db.zero.mp3</string>
    <float name="location_speed" value="0.09888778" />
    <boolean name="record_timeouts_flag" value="false" />
    <string name="server_domain_ssl"></string>
    <string name="server_port">4432</string>
    <boolean name="limit_ad_tracking_flag" value="false" />
    <long name="capture_scenario_sleep_interval_max" value="160" />
    <string name="alp_uid">XXXXXXXXX</string>
    <long name="location_poll_interval" value="15" />
</map>
```
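Because the upload behaviour hinges on boolean entries in this file, it can be useful to read them programmatically from a copy pulled off the device. This is a minimal sketch using the JDK’s built-in DOM parser; PrefsReader and readBoolean are hypothetical names, and it assumes the standard SharedPreferences layout shown above (`<boolean name="…" value="…" />`).

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Reads a named <boolean> entry from a copied SharedPreferences XML file.
final class PrefsReader {
    static boolean readBoolean(String xml, String name, boolean fallback) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            // Refuse DOCTYPEs: the file is untrusted input pulled from an app's storage.
            factory.setFeature("http://apache.org/xml/features/disallow-doctype-decl", true);
            Document doc = factory.newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            NodeList nodes = doc.getElementsByTagName("boolean");
            for (int i = 0; i < nodes.getLength(); i++) {
                Element e = (Element) nodes.item(i);
                if (name.equals(e.getAttribute("name"))) {
                    return Boolean.parseBoolean(e.getAttribute("value"));
                }
            }
            return fallback; // entry not present
        } catch (Exception e) {
            throw new IllegalArgumentException("could not parse preferences XML", e);
        }
    }
}
```

Called with the file above, `readBoolean(xml, "audio_file_upload_flag", false)` returns true and `readBoolean(xml, "audio_file_upload_timedout_flag", true)` returns false.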

In the test conducted, the device was kept stationary, the GPS location was spoofed to a random locale in the USA and the phonetic alphabet (alpha, bravo, charlie, …, zulu) was spoken aloud.

Audio recording is initiated by the AlphonsoService running in the background, which creates tv.alphonso.audiocaptureservice.AudioCaptureService to handle the recording functions (acr_mode is set to 2 in the XML file above, which selects SplitACR).

```java
public void startRecording() {
    this.mRecorderThread.startRecording(this.mCaptureInstance);
}

public void enableAcr(int acrMode) {
    if (this.mRecorderThread != null) {
        AudioCaptureClient captureClient;
        switch (acrMode) {
            case 1:
                captureClient = new LocalACR();
                break;
            case 2:
                captureClient = new SplitACR();
                break;
            case 4:
                captureClient = new DualACR();
                break;
            case 8:
                captureClient = new ServerACR();
                break;
            default:
                Log.e(TAG, "Invalid acrType: " + acrMode + ". Cannot instantiate AudioCaptureClient.");
                return;
        }
        if (acrMode != 8) {
            ((LocalACR) captureClient).setOnBoardAudioDBFilePath(this.mOnBoardAudioDBFilePath);
            ((LocalACR) captureClient).setOnBoardAudioDBFileDir(this.mOnBoardAudioDBFileDir);
            ((LocalACR) captureClient).setAcrShift(this.mAcrShift);
        }
        captureClient.setRecordTimeouts(this.mRecordTimeouts);
        captureClient.init(this.mDeviceId, this.mContext, this.mAudioFPUploadService, this.mAlphonsoClient, this);
        captureClient.setAudioFileUpload(this.mAudioFileUpload);
        captureClient.setAudioFileUploadTimedout(this.mAudioFileUploadTimedout);
        captureClient.mClockSkew = this.mClockSkew;
        this.mRecorderThread.addClient(acrMode, captureClient);
    }
}
```

The recorder thread sets some parameters that control the way the audio is recorded from the microphone. tv.alphonso.audiocaptureservice.RecorderThread has some relevant code:

```java
private static final int RECORDER_AUDIO_BYTES_PER_SEC = 16000;   // consistent with 8000 samples/s x 2 bytes x 1 channel
private static final int RECORDER_AUDIO_ENCODING = 2;            // value of AudioFormat.ENCODING_PCM_16BIT
private static final int RECORDER_BIG_BUFFER_MULTIPLIER = 16;
private static final int RECORDER_CHANNELS = 16;                 // value of AudioFormat.CHANNEL_IN_MONO (one channel, not sixteen)
private static final int RECORDER_SAMPLERATE_44100 = 44100;
private static final int RECORDER_SAMPLERATE_8000 = 8000;
private static final int RECORDER_SMALL_BUFFER_MULTIPLIER = 4;
```
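The constants above are consistent with 16-bit mono PCM at 8 kHz. A small worked calculation (PcmMath and its methods are hypothetical names) ties them to the capture_duration_ms value of 4000 in alphonso.xml: 4000 ms at 16,000 bytes per second gives 64,000 bytes, which is consistent with the 64 kB raw files found on the device, and five such files hold 20 seconds of audio. This assumes the stored audio is at 8 kHz rather than 44.1 kHz.

```java
// Worked arithmetic relating RecorderThread constants to raw file sizes.
// Assumes 16-bit mono PCM at 8 kHz, as the constants suggest.
final class PcmMath {
    static final int SAMPLE_RATE_HZ = 8000;
    static final int BYTES_PER_SAMPLE = 2; // ENCODING_PCM_16BIT
    static final int CHANNELS = 1;         // CHANNEL_IN_MONO

    /** 8000 x 2 x 1 = 16000, the value of RECORDER_AUDIO_BYTES_PER_SEC. */
    static int bytesPerSecond() {
        return SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * CHANNELS;
    }

    /** Size of a capture of the given length, e.g. capture_duration_ms = 4000. */
    static long bytesForDurationMs(long durationMs) {
        return durationMs * bytesPerSecond() / 1000;
    }

    /** Playing time represented by a number of raw PCM bytes. */
    static double secondsForBytes(long bytes) {
        return (double) bytes / bytesPerSecond();
    }
}
```

With these values, `bytesForDurationMs(4000)` is 64,000 and `secondsForBytes(5 * 64000)` is 20.0.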

The enableAcr method instantiates tv.alphonso.audiocaptureservice.SplitACR, which extends LocalACR, which in turn extends AudioCaptureClient. These classes set up the analysis of any captured audio by passing the raw audio data to an included native library called libacr.so. Here the fingerprint is generated via the method acrFingerprintOctet(…), which returns a byte array.

```java
public byte send(byte[] bytes, int numBytes, int sampleRate) {
    if (this.mFpStart == 0) {
        this.mFpStart = SystemClock.elapsedRealtime();
    }
    byte[] fingerPrint = acrFingerprintOctet(this.mLocalAudioMatchingToken[this.mCurrentTokenIndex], bytes, numBytes);
    if (!(fingerPrint == null || fingerPrint.length == 0)) {
        if (this.mFpStart != 0 && this.mFpEnd == 0) {
            this.mFpEnd = SystemClock.elapsedRealtime();
            this.mFpDelay = this.mFpEnd - this.mFpStart;
            if (AudioCaptureService.debug) {
                Log.d(TAG, "Delay = " + Utils.getDurationAsString(this.mFpDelay) + "; Fp-size = " + fingerPrint.length + " for token: " + this.mToken + " timestamp: " + this.mAudioBufferTimestampGMT);
            }
        }
        sendFingerprint(fingerPrint, sampleRate);
        this.mFpStart = 0;
        this.mFpEnd = 0;
    }
    return (byte) 2;
}
```

Once the above code has generated a fingerprint, it is passed to the sendFingerprint(…) method, which bundles the data with some other information and forwards it to tv.alphonso.alphonsoclient.AudioFPUploadServiceV2:

```java
public void sendFingerprint(byte[] fingerPrint, int sampleRate) {
    if (Utils.isNetworkAvailable(getContext())) {
        this.mNumFPsSent++;
        if (this.mAudioFPUploadService != null) {
            Message msg = this.mAudioFPUploadService.mHandler.obtainMessage();
            Bundle params = new Bundle();
            params.putInt("fp_ctr", this.mNumFPsSent);
            params.putString("device_id", this.mDeviceId);
            params.putByteArray("fingerprint", fingerPrint);
            params.putFloat("sample_rate", (float) sampleRate);
            if (this.mNumFPsSent == 1) {
                if (AudioCaptureService.debug) {
                    Log.d(TAG, "Sending AUDIO_FP_CAPTURE_START request to AudioFPUploadService for token: " + this.mToken);
                }
                params.putInt("capture_id", this.mCaptureInstance.mCaptureId);
                msg.what = 4;
                long timestamp = this.mCaptureInstance.mStartTimestampGMT + this.mClockSkew;
                if (AudioCaptureService.debug) {
                    Log.d(TAG, "actual: " + this.mCaptureInstance.mStartTimestampGMT + "; ClockSkew: " + this.mClockSkew + "; new: " + timestamp);
                }
                params.putLong("timestamp", timestamp);
                params.putLong("capture_time", this.mAudioCaptureService.mCaptureDuration);
            } else {
                params.putLong("timestamp", this.mAudioBufferTimestampGMT + this.mClockSkew);
                msg.what = 5;
            }
            params.putString("token", this.mToken);
            params.putBoolean("record_failure", this.mRecordTimeouts);
            msg.setData(params);
            this.mAudioFPUploadService.mHandler.sendMessage(msg);
            return;
        }
        return;
    }
    Log.e(TAG, "Network unavailable.");
}
```

The above series of functions deals with the explicitly named task of generating and uploading fingerprints. Fingerprints are not intelligible audio files but proprietary signatures formed by analysing audio for features. However, the file tv.alphonso.audiocaptureservice.LocalACR includes a function of interest that sends the filenames of the raw audio files to a function in tv.alphonso.alphonsoclient.AlphonsoClient.

```java
public void uploadAudioFileIfRequired(String resultSuffix) {
    if (getOnBoardAudioDBFileDir() == null) {
        return;
    }
    if ((isAudioFileUpload() && getSuccessResultSuffix() != null) || (isAudioFileUploadTimedout() && getSuccessResultSuffix() == null)) {
        String suffix;
        Bundle params = new Bundle();
        params.putString("device_id", this.mDeviceId);
        params.putString("start_time", this.mCaptureInstance.mStartTimeYYMMDD);
        params.putString("acr_type", getAcrType());
        params.putString("token", this.mToken);
        if (getSuccessResultSuffix() != null) {
            suffix = getSuccessResultSuffix();
        } else {
            suffix = resultSuffix;
        }
        params.putString("result_suffix", suffix.replace(' ', '_').replace('&', '_'));
        params.putString("filename", getOnBoardAudioDBFileDir() + "/" + this.mLocalAudioMatchingToken[this.mCurrentTokenIndex] + ".audio.raw");
        Message msg = this.mAlphonsoClient.mHandler.obtainMessage();
        msg.what = 8;
        msg.setData(params);
        if (AudioCaptureService.debug) {
            Log.i(TAG, "Sending AUDIO_CLIP_UPLOAD message to AlphonsoClient Service");
        }
        this.mAlphonsoClient.mHandler.sendMessage(msg);
    }
}
```

The following line is of particular interest, as it refers to the raw audio files that are stored on the device at the location given in the alphonso.xml file shown above.

```java
params.putString("filename", getOnBoardAudioDBFileDir() + "/" + this.mLocalAudioMatchingToken[this.mCurrentTokenIndex] + ".audio.raw");
```

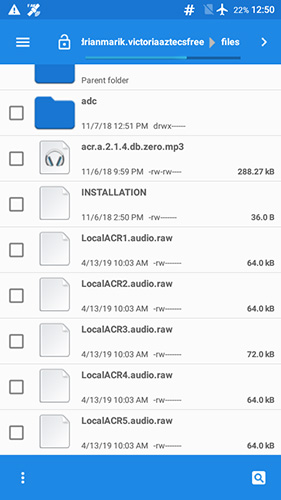

These raw audio files are located in the device storage allocated for the app.
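Whether uploadAudioFileIfRequired(…) sends anything at all is decided by the guard at its top. The sketch below restates that condition as a pure function so its truth table can be checked. UploadGuard and the parameter names are ours; hasSuccessSuffix stands for `getSuccessResultSuffix() != null`, and it is assumed (from the matching names, not traced through the code) that isAudioFileUpload() and isAudioFileUploadTimedout() reflect the audio_file_upload_flag and audio_file_upload_timedout_flag entries in alphonso.xml.

```java
// Restatement of the guard in LocalACR.uploadAudioFileIfRequired(...).
// The getOnBoardAudioDBFileDir() null check that precedes it is omitted here.
final class UploadGuard {
    static boolean uploadRequired(boolean audioFileUpload,
                                  boolean audioFileUploadTimedout,
                                  boolean hasSuccessSuffix) {
        // Branch 1: uploads are enabled and a success result suffix is present.
        // Branch 2: "timed out" uploads are enabled and no success suffix is present.
        return (audioFileUpload && hasSuccessSuffix)
            || (audioFileUploadTimedout && !hasSuccessSuffix);
    }
}
```

With the values recorded in this test’s alphonso.xml (true for audio_file_upload_flag, false for audio_file_upload_timedout_flag), the guard passes only when a success result suffix is present; the case without one would additionally require the timed-out flag to be true.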

The message object containing the raw audio filenames is then sent to a function in the file tv.alphonso.alphonsoclient.AlphonsoClient. Several key instructions are listed below (debug is hardcoded to false):

```java
private void processAudioFileUploadRequest(android.os.Bundle r14)
tv.alphonso.utils.Utils.isNetworkAvailable(r8);
r13.getAlphonsoServerUrl();
r4 = new java.io.BufferedInputStream;
r8 = new java.io.FileInputStream;
r9 = "filename";
r9 = r14.getString(r9);
r8 = "audio_file_contents";
r8 = r13.getAudioFileUploadFilename(r8, r9, r10, r11);
r5.put(r8, r0);
r7 = new java.lang.StringBuffer;
r8 = "/debug/audio/capture?filename=";
r7.append(r8);
r8 = debug;
if (r8 == 0) goto L_0x00cb;
r6 = new android.os.Bundle;
r13.invokeRESTApi(r7, r5, r6);
```

The invokeRESTApi method above calls invokeGenericRESTApi(2, uri, params, resultData), which hardcodes the int request as “2”. This is then sent to tv.alphonso.alphonsoclient.RESTService, whose handleMessage(…) function builds an HTTP POST request, sets its body from the byte array stored under “samples” or “audio_file_contents” (or, for other requests, a JSON body), and sends the request via an org.apache.http.impl.client.DefaultHttpClient object.

```java
case 2:
    request2 = new HttpPost();
    request2.setURI(new URI(action.toString()));
    HttpPost postRequest = (HttpPost) request2;
    if (action.toString().indexOf("audio_clip_update") != -1) {
        postRequest.setEntity(new ByteArrayEntity((byte[]) params.get("samples")));
    } else if (action.toString().indexOf("/audio/capture") != -1) {
        postRequest.setEntity(new ByteArrayEntity((byte[]) params.get("audio_file_contents")));
    } else {
        LinkedHashMap<String, Object> locBundle = null;
        if (params.containsKey("location")) {
            locBundle = (LinkedHashMap) params.remove("location");
        }
        JSONObject jobj = new JSONObject(params);
        if (locBundle != null) {
            jobj.put("location", new JSONObject(locBundle));
        }
        String jsonString = jobj.toString();
        if (AlphonsoClient.debug && params.containsKey("fb_uid")) {
            Log.d(TAG, "FB Registration request message: " + jsonString);
        }
        postRequest.setEntity(new StringEntity(jsonString));
        postRequest.setHeader("Content-Type", WebRequest.CONTENT_TYPE_JSON);
    }
    request = request2;
    break;
(...)
HttpResponse response = client.execute(request);
```
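Because both checks are plain substring tests, the /debug/audio/capture?filename= path assembled in processAudioFileUploadRequest(…) falls into the second branch, so its POST body is the raw bytes stored under “audio_file_contents”. The sketch below restates the body selection with java.net.http (Java 11+) in place of the deprecated Apache DefaultHttpClient. RequestRouter is a hypothetical name, the request is only built and never sent, the JSON branch takes an already-serialised string, and WebRequest.CONTENT_TYPE_JSON is assumed to be "application/json".

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.Map;

// Body selection from RESTService case 2, rebuilt with java.net.http. Builds, does not send.
final class RequestRouter {
    static HttpRequest build(String action, Map<String, Object> params, String jsonFallback) {
        HttpRequest.Builder b = HttpRequest.newBuilder(URI.create(action));
        if (action.contains("audio_clip_update")) {
            // Raw bytes stored under "samples".
            return b.POST(HttpRequest.BodyPublishers.ofByteArray((byte[]) params.get("samples"))).build();
        } else if (action.contains("/audio/capture")) {
            // Also matches "/debug/audio/capture?filename=...": raw audio file contents.
            return b.POST(HttpRequest.BodyPublishers.ofByteArray((byte[]) params.get("audio_file_contents"))).build();
        }
        // Everything else is sent as JSON (content type assumed).
        return b.header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonFallback))
                .build();
    }
}
```

Inspecting `request.bodyPublisher()` on the result shows the length of the body that would be posted, which is a convenient way to confirm which branch a given URL takes.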

Some other relevant parts of the code are found in files such as tv.alphonso.alphonsoclient.AlphonsoClient which sets the names of the raw audio files that will be stored and can be uploaded to the server:

```java
if (args.getBoolean("audio_file_upload")) {
    params.put("filename",
            getAudioFileUploadFilename(this.mDevId, args.getString("start_time"),
                    args.getString("acr_type"),
                    args.getString("result_suffix")));
}
```

```java
public String getAudioFileUploadFilename(String deviceId, String startTime, String acrType, String resultSuffix) {
    StringBuffer filename = new StringBuffer();
    filename.append("android");
    filename.append("-");
    filename.append(deviceId);
    filename.append("-");
    filename.append(this.mAlphonsoUid);
    filename.append("-");
    filename.append(startTime);
    filename.append("-");
    filename.append(acrType);
    filename.append("-");
    filename.append(resultSuffix);
    filename.append(".audio.raw");
    return filename.toString();
}
```
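Taken together, getAudioFileUploadFilename(…) and the result_suffix clean-up in uploadAudioFileIfRequired(…) define the name under which a clip would be uploaded. The sketch below restates that pattern with String.join and appends it to the /debug/audio/capture?filename= path seen in the decompiled processAudioFileUploadRequest(…). UploadName, its methods and the example values are hypothetical. How getAlphonsoServerUrl() combines server_domain and server_port is not shown, so the base URL is simply a parameter, and URL-encoding the filename is our assumption.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical restatement of the upload filename pattern and debug capture path.
final class UploadName {
    static String filename(String deviceId, String alphonsoUid, String startTime,
                           String acrType, String resultSuffix) {
        // Same clean-up as uploadAudioFileIfRequired: spaces and ampersands become underscores.
        String suffix = resultSuffix.replace(' ', '_').replace('&', '_');
        return String.join("-", "android", deviceId, alphonsoUid, startTime, acrType, suffix)
                + ".audio.raw";
    }

    static String captureUrl(String baseUrl, String filename) {
        // URL-encoding is an assumption; the decompiled code only shows the path prefix.
        return baseUrl + "/debug/audio/capture?filename="
                + URLEncoder.encode(filename, StandardCharsets.UTF_8);
    }
}
```

For example, `UploadName.filename("DEV", "UID", "181107", "split", "no match")` gives `android-DEV-UID-181107-split-no_match.audio.raw`.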

The sequential numbering allows for a theoretical maximum of about 20 seconds of raw audio spread over five files, if each file is limited to 64 kB and contains 16-bit PCM data at a sample rate of 8 kHz. The file tv.alphonso.audiocaptureservice.LocalACR also sets the unique, sequential part of the filenames and has an upload audio file function:

```java
protected String[] mLocalAudioMatchingToken = new String[]{"LocalACR1", "LocalACR2", "LocalACR3", "LocalACR4", "LocalACR5"};
```

Audio

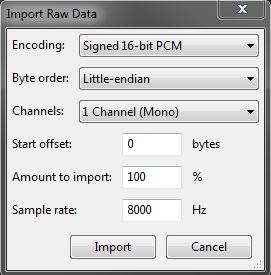

After the above test has been run, the captured raw audio files are copied from the mobile device to a computer running Audacity. Each of the raw audio files is just that: raw data representing Pulse Code Modulation (PCM) audio. PCM stores the result of an analogue-to-digital conversion by sampling the signal’s amplitude at regular intervals (every 1 / sample rate seconds) and recording each sample as a value with a fixed bit depth.

The raw audio has no file header to tell a program what format it is in, so some manual settings are used at the import stage in Audacity. Interestingly, the audio plays back correctly at a sample rate of 8 kHz, which may be the result of a downsampling process in the SDK or may have been set by the original AudioRecord call. Each of the three raw audio files is 64 kB in size, which is close to the maximum size of an IPv4 packet (65,535 bytes).
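Instead of re-entering the import settings in Audacity each time, the raw files can be wrapped in a standard 44-byte WAV header. This is a minimal sketch; RawToWav is a hypothetical helper, and the format values (16-bit signed, little-endian, mono, 8 kHz) are assumptions based on the RecorderThread constants and the sample rate that produced intelligible playback, not values stored in the files.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Prepends a canonical 44-byte RIFF/WAVE header to headerless PCM data.
final class RawToWav {
    static byte[] wrap(byte[] pcm, int sampleRate, int channels, int bitsPerSample) {
        int blockAlign = channels * bitsPerSample / 8;
        int byteRate = sampleRate * blockAlign;
        ByteBuffer b = ByteBuffer.allocate(44 + pcm.length).order(ByteOrder.LITTLE_ENDIAN);
        b.put("RIFF".getBytes(StandardCharsets.US_ASCII));
        b.putInt(36 + pcm.length);          // size of everything after this field
        b.put("WAVE".getBytes(StandardCharsets.US_ASCII));
        b.put("fmt ".getBytes(StandardCharsets.US_ASCII));
        b.putInt(16);                       // fmt chunk size for plain PCM
        b.putShort((short) 1);              // audio format 1 = uncompressed PCM
        b.putShort((short) channels);
        b.putInt(sampleRate);
        b.putInt(byteRate);                 // 8000 x 2 = 16000 for 16-bit mono at 8 kHz
        b.putShort((short) blockAlign);
        b.putShort((short) bitsPerSample);
        b.put("data".getBytes(StandardCharsets.US_ASCII));
        b.putInt(pcm.length);
        b.put(pcm);                         // samples copied unchanged
        return b.array();
    }
}
```

For example, `Files.write(Path.of("LocalACR1.wav"), RawToWav.wrap(Files.readAllBytes(Path.of("LocalACR1.audio.raw")), 8000, 1, 16))` (with the java.nio.file imports) would produce a file that most audio players can open directly.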

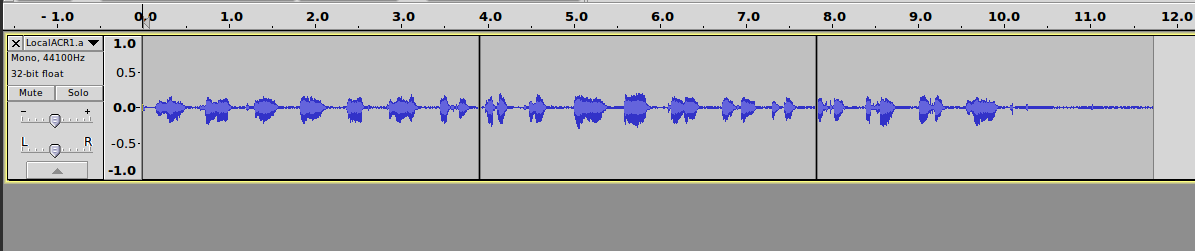

The imported audio waveform, consisting of three raw files that provide ten seconds of intelligible audio, looks like this; the blocks of the spoken phonetic alphabet are easy to spot.

The three raw audio files stored on the device are joined and saved as an mp3 for legible playback: 3-step-join-speed-redux.mp3

Original five raw audio files (no headers) as recorded by the app SDK (each 64 kB): LocalACR1.audio.raw

Conclusion

The Alphonso SDK included in the free game downloaded from the Google Play store:

- did inform the user of its intentions.

- did give the user the option to switch off the audio record function.

- did record ten seconds of human speech and save it as three raw files with specific, sequential filenames.

The SDK has several options, settings and functions that indicate that it:

- can upload raw audio files that have the same sequential filenames to server(s) attached to sub-domains.

- does have the option to upload audio fingerprints to their servers.

One theory as to what occurred during this demonstration is that the SDK recorded some audio at the nominal 44.1 kHz into five raw audio files, created a fingerprint file from that audio, attempted to match it against its own on-device database, failed to find a match, and finally down-sampled the original audio to 8 kHz ready for uploading to the servers along with the fingerprint file. A possible reason for this last step is so that the audio could be identified at the server, either by some computational method or by mechanical Turks listening for key descriptive features, so that the new audio and fingerprint could be added to the database.

Further, the game app integrates at least 16 tracker SDKs, while the Alphonso SDK itself integrates with Facebook. It also collects location information as well as device-specific identifying information. Although the app used in this demonstration was last updated in 2017 (version 1.0.16), it still successfully connects and posts data to a number of servers, as well as downloading various files.

Future

The demonstration examined here was run on a mobile device with GPS spoofing enabled; however, querying its network location would have revealed the IP address assigned by the local ISP. A future run should either be located in the US or be connected through a proxy that exits in the US.

Alphonso appears to have recently moved away from the “smart phone” software area and into the “smart TV” area, with partnerships that hint at using both TV apps and hardware to identify the audio from television broadcasts, streaming videos and gaming content.

Below is an image from the latest marketing materials from Alphonso showing associations with other data providing companies:

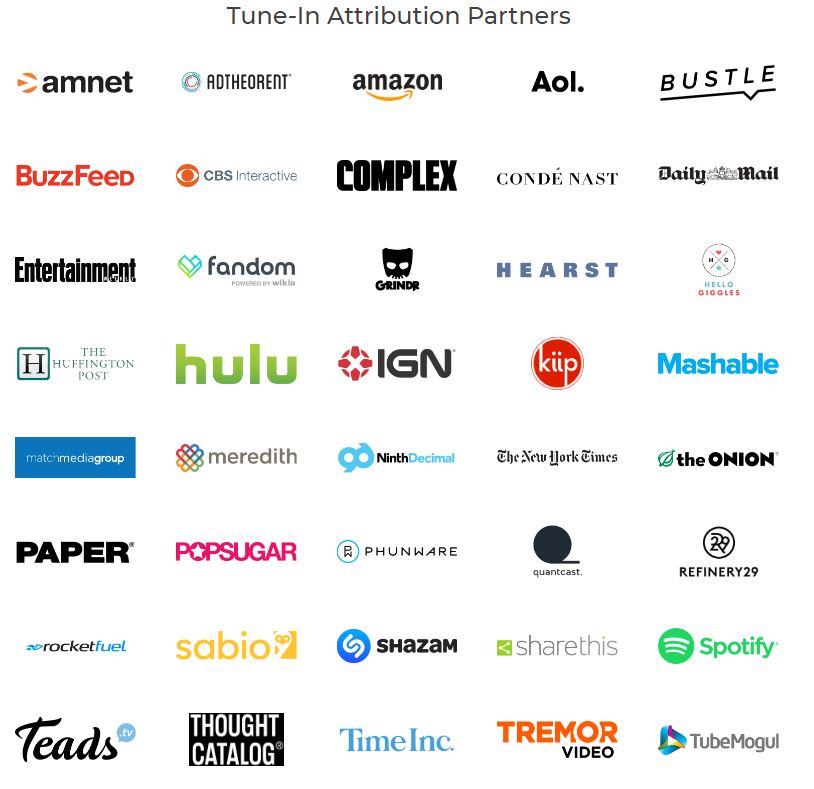

Below is an image from Alphonso showing “Tune-In” attribution partners:

In 2015 Alphonso filed for a patent titled “Efficient Apparatus and Method for Audio Signature Generation Using Motion”. A similar patent was filed at the same time, differing in that it describes an Audio Threshold feature that sets recording parameters. The first patent’s abstract is below:

> An automatic content recognition system that includes a user device for the purpose of capturing audio and generating an audio signature. The user device may be a Smartphone or tablet. The system is also capable of determining whether a user device is in motion and refraining from audio monitoring and/or generating audio signatures when the user device is in motion. Motion may also be used to reduce the frequency of audio monitoring and/or signature generation. The system may have a database within the user device or the user device may communicate with a server having a database that contains reference audio signatures.